Table of Contents

1.1.1 Location Based Augmented Reality

1.1.2 Vision Based Augmented Reality

1.2 Augmented Reality Games

1.2.1 The Eye of Judgment

1.3 Massively Multiplayer Online Games

1.4 Massively Multiplayer Mobile Games

1.5 Professional Game Development Engines for Android

1.6 Relevance of Android for Augmented Reality Mobile Development

2 Graphics Library Evaluation

3.1 Application Requirements

3.3.1 General Information

3.3.2.4 Inventory Game State

3.3.2.5 Binoculars Game State

3.3.2.6 Group Management and Messaging Game State

3.4 Game Administration and Area Handling

4.1 Device alignment in the virtual scene and the camera display

4.2 Data exchange between mobile devices and servers

4.4 Consistency Management in Networked Real-Time Applications

List of tables

Table 1 - Evaluation criteria

Table 2 - JPCT-AE evaluation

Table 3 - JME3 evaluation

Table 4 - Ardor3D evaluation

Table 5 - libgdx evaluation

Table 6 - Ranked final evaluation results

List of figures

Figure 1 – Layar Browser UI [3] 14

Figure 2 - Junaio Browser UI [4] 14

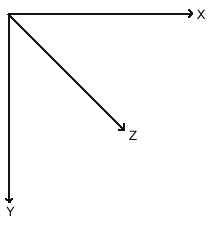

Figure 3 - SmartAR digital water on a table [5] 16

Figure 4 – Eye of Judgment board [10] 17

Figure 5 - PSP with attached camera running Invizimals [11] 18

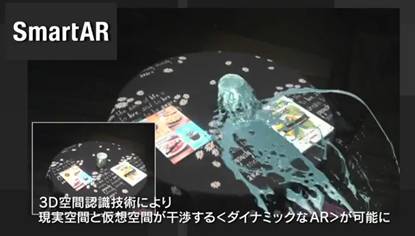

Figure 6 - MMORPG Subscriptions [14] 19

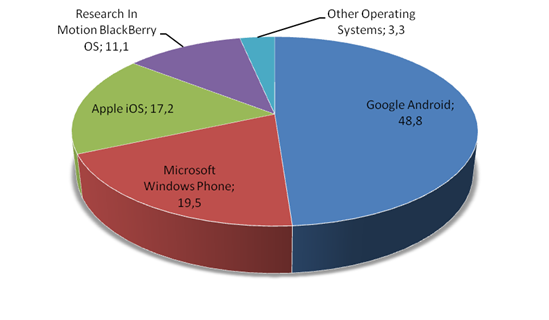

Figure 8 - Mobile OS Market Share in 2015 according to Gartner 24

Figure 9 - Android Tablet market share 2011 / 2015 [32] 25

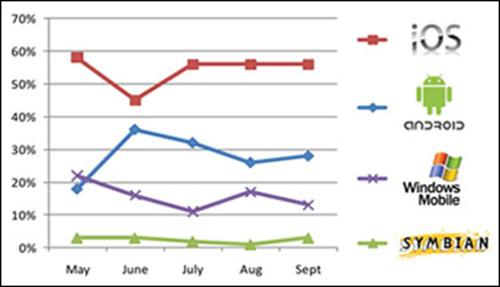

Figure 10 - Mobile OS market share in enterprises in 2010 [34] 26

Figure 11 - Tiobe Programming Community Index [35] 27

Figure 12 - Object highlighting in the prototype application.. 32

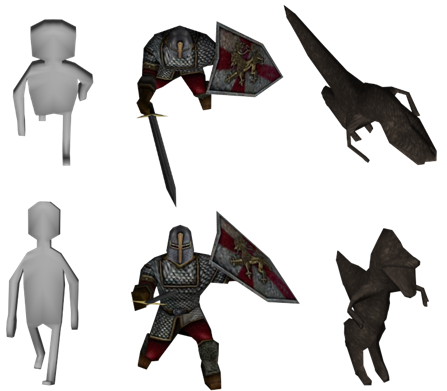

Figure 13 - Models used in the prototype application.. 45

Figure 14 – Areas of Interest and Creatures in Admin UI 48

Figure 15 - CSAs containing several AOIs. 50

Figure 14 - Demonstration of view restrictions by using AOIs. 50

Figure 17 - Quest Administration Interface. 54

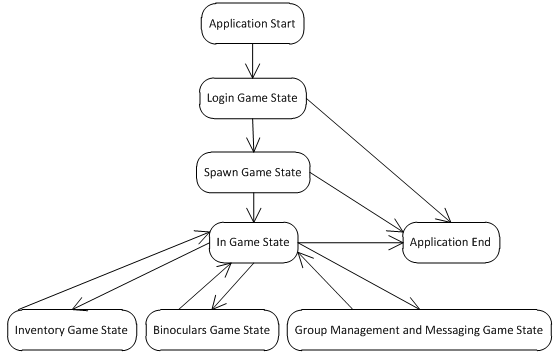

Figure 18 - Diagram of all game states. 55

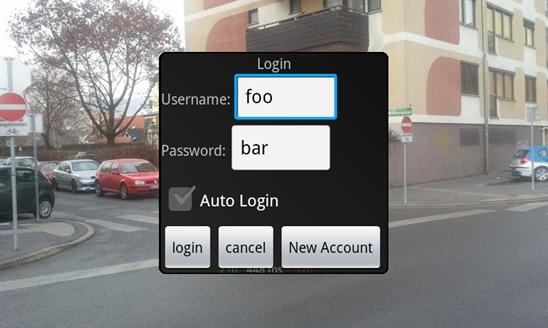

Figure 19 - Login Game Screen.. 55

Figure 20 - Spawn Game Screen.. 56

Figure 21 - Main Game Screen.. 57

Figure 22 - Inventory Game Screen.. 58

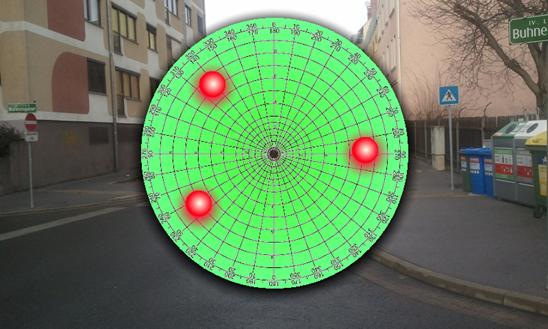

Figure 23 - Binoculars Game Screen.. 58

Figure 24 - Group Manamgement Game Screen.. 59

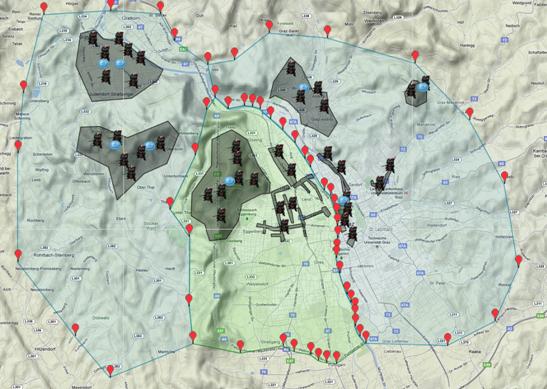

Figure 25 - Selected CSA with two adjacent CSAs around Graz. 60

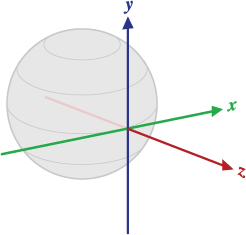

Figure 26 - Coordinate System of the Device Orientation matrix [52] 62

Figure 27 - Cooridnate System of JPCT-AE [53] 62

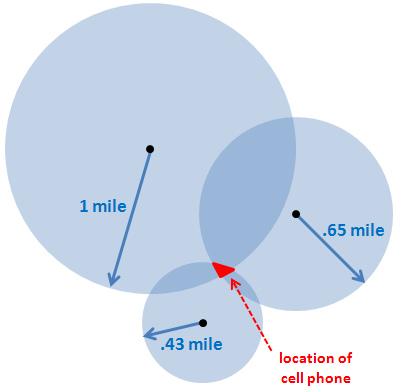

Figure 28 - Mast triangulation between three radio towers [58] 64

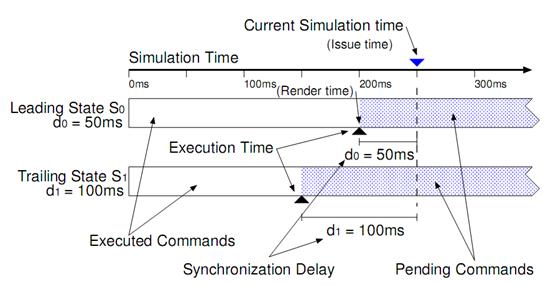

Figure 29 - TSS Terminology. 75

List of abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AI | Artificial Intelligence |
| AR | Augmented Reality |
| CAS | Central Account Server |
| CSA | Central Server Area |
| DGPS | Differential Global Positioning System |
| FPS | Frames per Second |
| GPS | Global Positioning System |
| JME | JMonkeyEngine |
| JPCT | Java Perspective Correct Texturemapping |
| LBAR | Location Based Augmented Reality |
| MMMG | Massively Multiplayer Mobile Game |
| MMOG | Massively Multiplayer Online Game |
| MMORPG | Massively Multiplayer Online Role Playing Game |
| OS | Operating System |
| POI | Point of Interest |
| PSP | PlayStation Portable |
| PvM | Player versus Monster |
| PvP | Player versus Player |
| RTA | Real-Time Application |
| RTK | Real-Time Kinematic |
| SGS | Samsung Galaxy S i9000 |
| SSL | Secure Sockets Layer |
| TSS | Trailing State Synchronization |
| TWS | Time Warp Synchronization |
| VBAR | Vision Based Augmented Reality |
| WotC | Wizards of the Coast |
| WoW | World of Warcraft |

Summary

Augmented Reality is one of the most disruptive technologies of our time. It opens up new ways of viewing and interacting with information. At present, however, the technology is not yet used to its full extent.

To change this and to make the technology available to a large audience, Augmented Reality can be used for controlling and visualizing video games. Video games have always been an important factor in improving hardware and software components as well as existing ways of interacting with digital content.

At present, however, there are no professional games that are based on Augmented Reality and integrate elaborate game mechanics such as massively multiplayer modes. This thesis was therefore written with the aim of proving that the current state of the art already makes it possible to develop real-time massively multiplayer games based on Augmented Reality and to keep them scalable for a large number of concurrent users. To this end, a prototype of a cooperative real-time massively multiplayer game for mobile devices is developed as well.

The thesis is divided into five chapters. First, it is explained why developing Augmented Reality based applications can pay off from a business perspective. Next, technical aspects and an evaluation of graphics libraries are described in more detail. The third chapter describes the developed prototype in detail and highlights the respective views of the user and the application administrator. The fourth chapter lays out further important aspects of the implementation, and the fifth chapter concludes with a discussion of the results.

Abstract

Augmented Reality is currently considered one of the top ten disruptive technologies. It promises new ways of consuming and interacting with any kind of information. While the technology does not yet seem to be used to its full potential, video games are considered a way to deliver Augmented Reality to a large audience. Video games have always been a major driving factor in improving hardware and software alike by delivering better graphics and new ways of user interaction. What is more, video games are a major economic factor worldwide and can connect a large number of people no matter where they are from. This kind of connection and interaction between people is becoming more common with the current trend towards web applications like Facebook or Google+.

As there are no examples of Augmented Reality based games that offer features common in video games, such as large-scale multiplayer modes, this thesis is written with the intention of proving that real-time massively multiplayer Augmented Reality games can be created at the present time. To this end, a prototype of a real-time massively multiplayer cooperative game for mobile devices is developed alongside this thesis.

The thesis is structured into five chapters. Firstly, it is highlighted why the development of Augmented Reality based applications on mobile devices is financially feasible. Secondly, technical aspects with a focus on graphics display on mobile devices are laid out. Thirdly, the prototype application is explained in detail; the idea behind creating the prototype is pointed out, and both the user's view and the administrative implementation are explained. Fourthly, important aspects of the actual implementation are laid out and the problems encountered are discussed. Lastly, the thesis provides a short conclusion about the results.

1 Introduction

This thesis is written with the intention of proving that real-time massively multiplayer Location Based Augmented Reality (LBAR) games can be developed for mobile devices that are in widespread use today. To prove this, the focus is placed both on displaying and interacting with location based information in 2D or 3D and on synchronizing states and local information with other application participants. In addition, the thesis deals with the business perspective of building large-scale AR applications.

A prototype of a real-time massively multiplayer cooperative game is developed alongside this thesis to prove that complex AR applications can be developed and used at the current time. A real-time massively multiplayer game is chosen because games of this genre usually place high demands on both user interaction and graphical representation capabilities. What is more, massively multiplayer online games have great business potential, which is most likely a prerequisite for successfully bringing new technologies to the market.

As developing such an application is rather complex and time-consuming, libraries that facilitate the development are chosen for as many tasks as possible. Only libraries that are available free of charge are used, which ensures that the outcome can be reproduced without making large investments.

The thesis is structured in five main chapters. Firstly, it is explained why creating applications like the prototype might be financially feasible for companies. The business relevance of AR and Android centered mobile application development is highlighted with examples and figures, with a focus on mobile game development. Secondly, the thesis compares freely available graphics engines on Android and highlights requirements and technical problems when developing AR applications on mobile devices. Thirdly, the prototype application is explained in detail; the idea behind creating the prototype is pointed out, and both the user's view and the administrative implementation are explained. Fourthly, important aspects of the actual implementation are laid out and the problems encountered are discussed. This chapter is mainly focused on engineering and programming tasks. Whenever possible, solutions for the problems encountered are given. Lastly, the thesis provides a short conclusion about the results.

Furthermore, the thesis’ starting point is that Augmented Reality (AR) presents a promising technology for displaying information in a natural and intuitive way to the end user on mobile devices. In this respect, AR stands for a new kind of information visualization that enables the user to interact with information in a simple way. Moreover, the principle of connecting data to specific geographical regions can be easily integrated into AR applications as they aim at augmenting the current world around the device.

1.1 Augmented Reality

AR enables a user to see reality and supplements it with digital information. According to Azuma’s definition of AR, this digital information should be interactive and registered in 3D [1]. Although AR could technically also address other senses such as smell, touch, or hearing, this thesis focuses on AR restricted to vision and localization [2].

The majority of current augmented reality applications display an image of the real world, which is usually retrieved from a camera. This camera image is blended with a 3D scene similar to the scenes of computer games or of 3D simulation and visualization software like 3DS Max[1] or Blender[2], or at least with a 2D scene that is mapped to the 3D environment. The user commonly interacts directly with objects in these virtual scenes or through an on-screen user interface. Which objects are displayed on the screen depends on the orientation and position of the camera in the real world as well as on the implementation of the AR application. Examples of this are layer-based AR browsers or image-tracking applications like 3illiards[3]. The following subchapters outline two of the most frequently used implementations of commercially available AR applications.

1.1.1 Location Based Augmented Reality

In LBAR applications the positions of displayed objects are determined by the current geographical location of the user and the orientation of the device that runs the application. These applications generally link geographical coordinates to the digital objects and determine their position in the virtual 3D scene by comparing them to the Global Positioning System (GPS) coordinates of the user’s device.
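The comparison of coordinates described above can be sketched in a few lines. The following Python example is a minimal illustration and not the prototype's implementation: it assumes a flat-earth (equirectangular) approximation, which is only adequate for the short distances typical of LBAR scenes, and all function names are hypothetical. It converts the geographic coordinates of an object into east/north offsets in metres relative to the device and derives the bearing at which the object should appear relative to the device's compass heading.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; an approximation


def geo_to_local(device_lat, device_lon, obj_lat, obj_lon):
    """Return (east, north) offsets in metres of an object relative to the device.

    Uses an equirectangular approximation, so it is only meant for
    distances of a few kilometres at most.
    """
    d_lat = math.radians(obj_lat - device_lat)
    d_lon = math.radians(obj_lon - device_lon)
    north = d_lat * EARTH_RADIUS_M
    east = d_lon * EARTH_RADIUS_M * math.cos(math.radians(device_lat))
    return east, north


def bearing_deg(east, north):
    """Compass bearing from the device to the object (0 = north, 90 = east)."""
    return math.degrees(math.atan2(east, north)) % 360.0


def relative_bearing_deg(bearing, heading):
    """Angle of the object relative to the viewing direction, in [-180, 180)."""
    return (bearing - heading + 180.0) % 360.0 - 180.0


# Hypothetical usage: an object roughly 111 m north of the device,
# while the device is heading 350 degrees (slightly west of north).
east, north = geo_to_local(47.0, 15.0, 47.001, 15.0)
angle = relative_bearing_deg(bearing_deg(east, north), 350.0)
```

An object would then be drawn only if the relative bearing lies within half of the camera's horizontal field of view, with the east/north offsets used as its position in the virtual scene.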

Today, LBAR applications are mostly developed for modern smartphones and tablets, as these devices usually include

· A camera for displaying the real world,

· GPS for location retrieval,

· Magnetic field, acceleration and gyroscope sensors for calculating the device orientation, as well as

· High-performance central processing units and graphics processing units for 2D / 3D graphics visualization.

In recent years, several LBAR development platforms have been established. Particularly noteworthy are layer-based platforms like Layar[4] and Junaio[5], which are both available for Android based devices and the iPhone. The main purpose of these applications is to display any kind of location based information on top of the camera image. These layer-based AR browsers let developers create their own layers (called channels in Junaio) of content that the user can browse, similar to websites, with the platform specific browser application. This way, developers and content creators can publish related information, such as restaurant guides or sightseeing tips, in their individual layers. Figure 1 presents two ways of using the Layar browser to find interesting locations, and Figure 2 shows the UI of the Junaio browser. Besides these closed but freely usable platforms, open-source AR browsers like mixare[6] are currently available to display location based data depending on the phone’s location and orientation.

Figure 1 - Layar Browser UI [3]

Figure 2 - Junaio Browser UI [4]

The actual implementation and design of an LBAR application depends heavily on the device it runs on. In the past, most of these devices were custom-built or were prototypes that never made it into the mass consumer market. Nowadays, smartphones are the most straightforward devices on which to implement and run these applications. As GPS signals usually cannot be received in buildings or underground, applications that have to work in such locations need to implement some other mechanism to determine the device’s location. Otherwise, LBAR applications can only be used outdoors, where the lighting and contrast of the camera image can only be controlled to a low degree. This constitutes a severe handicap to the visualization of digital information, as blending a scene on top of the camera image may further reduce the brightness and contrast of both the scene and the image [2]. Together with continuously changing lighting conditions, this means that AR might not be best suited for outdoor applications. To partially avoid these problems, the AR browser Junaio allows the developer to mix LBAR and Vision Based Augmented Reality (VBAR), which Junaio calls GLUE[7], through its channels.

1.1.2 Vision Based Augmented Reality

In contrast to LBAR, VBAR calculates the 3D scene’s view by scanning the camera image for predefined images. These images are commonly called patterns. Once one of these patterns is recognized in the camera image, the application calculates the orientation and location of the camera relative to the pattern. This way, the position of the predefined image becomes the origin of the scene’s 3D coordinate system.
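The step of making the pattern the origin of the scene can be illustrated as follows. This Python sketch assumes that a tracking library reports the pattern's pose in camera coordinates as a 3x3 rotation matrix `rotation` and a translation vector `translation`, so that a point on the pattern maps to the camera frame as p_cam = R · p_pattern + t. This reporting convention and the function name are assumptions made for illustration, not the API of any specific VBAR library. Inverting the pose yields the camera's orientation and position in the pattern's coordinate system.

```python
def camera_pose_in_pattern_frame(rotation, translation):
    """Invert a pattern pose given in camera coordinates.

    rotation: 3x3 rotation matrix as nested lists (pattern -> camera).
    translation: 3-element list, pattern origin expressed in the camera frame.
    Returns (rotation_inv, camera_position), both in the pattern frame.
    """
    # The inverse of a rotation matrix is its transpose.
    rotation_inv = [[rotation[c][r] for c in range(3)] for r in range(3)]
    # Camera origin in the pattern frame: -R^T * t
    camera_position = [
        -sum(rotation_inv[r][c] * translation[c] for c in range(3))
        for r in range(3)
    ]
    return rotation_inv, camera_position


# Hypothetical usage: pattern rotated 90 degrees about the camera's z axis
# and located at (1, 0, 2) in the camera frame.
R = [[0, -1, 0],
     [1, 0, 0],
     [0, 0, 1]]
t = [1, 0, 2]
R_inv, cam_pos = camera_pose_in_pattern_frame(R, t)
```

With the camera expressed in the pattern's frame, virtual objects can be placed at fixed coordinates around the pattern and rendered from the camera's point of view.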

Unlike LBAR systems, using VBAR applications merely requires a camera and any kind of computer that provides enough processing power for pattern recognition and, possibly, a graphics chip for 2D and 3D rendering.

Today, several libraries for VBAR application development exist, ARToolkit[8] and NyARToolkit[9] among others. These libraries are available for the programming languages Java[10] (desktop, Applet, Android), C#[11] (WinForms, Silverlight), ActionScript 3.0[12] (Flash), Objective-C[13] (iPhone), and for both C and C++[5].

In October 2010, Qualcomm released an SDK[14] for creating high performance VBAR applications for the Android platform that allows the use of complex images for pattern recognition. A Unity3D plug-in for creating VBAR Android applications using this SDK is provided as well [6].

Current image recognition software allows for high performance and high precision pattern tracking. Hence, the advantages of VBAR systems over LBAR systems are higher accuracy when tracking the position of objects and a lower susceptibility to environmental factors. Furthermore, VBAR can be used in buildings, whereas LBAR systems that rely only on GPS for position tracking cannot.

Advanced VBAR techniques like Sony’s SmartAR[15] even include the environment in the position and interaction calculation after a marker is tracked, as can be seen in Figure 3. This way, the location in a room relative to a marker can be calculated even if the marker is no longer tracked, and real objects can be used to interact with the 3D scene. Examples of this are virtual balls that roll on a table and start falling once they reach the edge of the table, or digital water that flows down a wall and splashes off real objects. VBAR applications usually do not require the user to walk far, as the space in which the application is used is limited to a short range around the patterns. Thus, VBAR applications will most likely be favored over LBAR applications for implementing short term games or any kind of stationary application.

Figure 3 - SmartAR digital water on a table [7]

1.2 Augmented Reality Games

Although the idea of AR as a technology has existed for several years [8], implementations in commercial video games have barely appeared until recently. Some games appeared on portable gaming consoles like the PlayStation Portable[16] (PSP) and the Nintendo 3DS. The latter even ships with six AR markers that can be used with preinstalled games like “Target-Shooting” and “Mii-Images” [9]. Although the Nintendo 3DS already ships with AR games, the majority of large commercial AR games have so far been developed for Sony devices.

What is special about computer games is that they tend to push the limits of hardware and software and introduce new ways to fascinate consumers. Games are thus a major driver in making AR, like any other innovative technology, more popular and better known to the public. Some of the aforementioned games are therefore introduced next.

1.2.1 The Eye of Judgment

In 2007, “The Eye of Judgment”[17] was one of the first commercially available games to incorporate AR in its game design. Sony Computer Entertainment[18] developed this trading card game together with Wizards of the Coast[19] (WotC) for the PS3. The game is played on a square board that is divided into a 3x3 grid. Players need to capture the fields of this grid by using spells and creatures that are summoned with playing cards, in a similar manner to other card games developed by WotC. What makes the game unique, though, is its use of VBAR: the playing cards are tracked with EyeToy, and all battles during a game session are visualized in 3D. Figure 4 demonstrates the visualization of the game board on the PS3.

Figure 4 - Eye of Judgment board [10]

1.2.2 EyePet

EyePet[20] is another AR game. In this game, which is available for the PS3, the player is responsible for a little pet that is projected over a video stream of the real world using EyeToy and VBAR. Similar to Tamagotchi[21], the player has to feed the pet and play with it. Most interactions with the pet happen through pattern and image recognition as well as motion control. This way, the user can play games, change the pet’s appearance, or check its health status.

1.2.3 Invizimals

Invizimals is another VBAR game, available for the PSP. The game is based on the Pokémon[22] game principle. Using a PSP, players have to find creatures called Invizimals in the real world. Depending on environmental factors like the color of surfaces that are visible in the camera image or the time of day, the application determines whether an Invizimal is nearby. Once users are near an Invizimal, they can use a graphical pattern to track it on the PSP display and capture it. These creatures can be collected and raised or traded with other players. Figure 5 shows a PSP that blends two Invizimals over the camera display via VBAR.

Figure 5 - PSP with attached camera running Invizimals [11]

Finally, a player can fight with his or her own Invizimals against other Invizimals that are controlled by artificial intelligence (AI) or by other players. The pattern is always used to display the Invizimal, be it for trading or in a battle. During a fight, the player can interact with the 3D scene via key input, by shaking the device, and by blowing [12].

1.3 Massively Multiplayer Online Games

Massively Multiplayer Online Games (MMOGs) are games in which up to hundreds of thousands of players can interact with each other within the same virtual world. The first MMOGs date back to the late 1970s. The genre started with games like Multi-User Dungeon, a text-based role playing adventure game, and has become popular all over the world ever since [13].

Massively Multiplayer Online Role Playing Games (MMORPGs), probably the most popular type of MMOG, have risen in popularity since 1998, peaking at around 22 million subscribers in 2011 according to mmodata.net. Figure 6 outlines the increase in MMORPG subscriptions from 1997 to 2011.

Figure 6 - MMORPG Subscriptions [14]

Although text-based MMORPGs from the 1980s used to be free, currently popular MMOGs generate large returns for the companies that develop and maintain them. While computer games are commonly sold for a one-time price per copy, MMORPGs are usually charged on a monthly basis. Thus, building strong and loyal relationships with customers is even more important for companies that sell MMORPGs than for those that sell games of other genres.

Owing to steady growth in the number of subscribers and continual income from micro transaction based fees charged on a monthly basis, successful MMOGs are a source of high economic value [15]. World of Warcraft (WoW), the most popular MMORPG today, generated net revenue of 395 million US dollars between March 2010 and March 2011 [16].

What should still be considered, however, is that MMOGs often require central servers, which are provided by the respective software development companies. While MMOGs can generate a large return on investment, games of this genre require large investments up front as well as continuous investments in maintaining both the game software and the central administrative hardware. Moreover, depending on the type of MMOG, synchronizing game states to maintain a consistent world between game participants can be a non-trivial problem. For example, maintaining a persistent state in a turn based game like Atlantica Online[23] is usually easier than in a real-time game like Lord of the Rings Online[24]. In the latter, the order of actions depends on how fast a player reacts. Thus, MMOGs that allow the participants to execute actions concurrently need to implement some sort of state maintenance system such as “Adaptable Dead-Reckoning” [17].
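To make the idea of dead reckoning more concrete, the following Python sketch shows plain threshold-based dead reckoning. It is not the adaptable variant described in [17], and all names and the threshold value are hypothetical. Every participant extrapolates a remote player's position from the last received position and velocity. The sender runs the same extrapolation and only transmits a new state once its true position deviates from the extrapolated one by more than a threshold, which reduces the number of messages needed to keep the world approximately consistent.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    """Last transmitted state of a player: position, velocity, timestamp."""
    x: float
    y: float
    vx: float
    vy: float
    t: float


def extrapolate(state, now):
    """Predict the position at time `now` assuming constant velocity."""
    dt = now - state.t
    return state.x + state.vx * dt, state.y + state.vy * dt


def needs_update(last_sent, true_x, true_y, now, threshold):
    """Sender-side check: has the prediction drifted too far from the truth?"""
    pred_x, pred_y = extrapolate(last_sent, now)
    return math.hypot(true_x - pred_x, true_y - pred_y) > threshold


# Hypothetical usage: the player was last reported at the origin,
# moving 1 m/s along x. Two seconds later the true position is checked.
last_sent = State(x=0.0, y=0.0, vx=1.0, vy=0.0, t=0.0)
on_course = needs_update(last_sent, 2.1, 0.0, now=2.0, threshold=0.5)
off_course = needs_update(last_sent, 2.0, 1.0, now=2.0, threshold=0.5)
```

In this example no message is required while the player keeps moving roughly as predicted, whereas the sideways deviation of one metre exceeds the threshold and triggers a state update. A smaller threshold yields higher consistency at the cost of more network traffic.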

1.4 Massively Multiplayer Mobile Games

According to a survey of mobile phone users in the US and UK, 27% of mobile phone owners have a smartphone and 21% have a web-enabled phone [18]. The number of games played on mobile phones has increased dramatically since 2009, and smartphones further reinforce this trend. Yet the majority of gamers played less than one hour per week in 2009, and these numbers have not changed considerably since. In contrast to the time spent playing games on mobile phones, however, the net revenue generated by mobile phone games has increased dramatically [19].

The adoption of Massively Multiplayer Mobile Games (MMMGs) might lengthen the time people spend playing games on mobile phones, though. MMMGs are MMOGs that run on mobile devices. Owing to recent advances in mobile hardware, these emerging mobile multiplayer platforms may become highly relevant to the market in the near future.

There are already several MMMGs available on the Android Market at the time of writing. For example, Parallel Kingdom[25], a location based massively multiplayer game in which players can fight monsters and claim territory in the real world, already attracts more than 500,000 people worldwide [20].

Order & Chaos Online[26], a WoW-like game that runs on the iPhone 3GS or later, on the iPad, and on several Android based smartphones, generated revenue of more than one million US dollars within 20 days of its official release [21]. Figure 7 shows a screenshot of Order & Chaos Online.

Besides MMMGs that are developed to be played on mobile devices, like Emross War[27] or Pirates of the Caribbean[28], ports of desktop MMOGs like Tibia[29] are currently available as well. Porting older games that do not need large screens to mobile devices might be financially tenable, as the smartphone market could open up new possibilities for application development and application distribution.


1.5 Professional Game Development Engines for Android

Considerable effort has recently been made to advance game development on mobile devices. At present, several commercial game development tools and game engines exist that run on Android devices.

First of all, there is an Android 3.x optimized version of the Unreal Engine[30], a widely used game engine that has been used to create a variety of commercial games, with titles from the Unreal Tournament[31] and Mass Effect[32] series being among the best known. Originally, the engine was created for developing games for personal computers and video game consoles. Now it runs on Google Android, Microsoft Windows, Microsoft Xbox 360, Mac OS, Apple iOS, Sony PlayStation 3, Sony PlayStation Vita, and Adobe Flash. While the engine was designed for creating games in the first place, it has also been used for films and television as well as for simulation and training software. Highlights among the supported features are advanced character animation, pre-configured artificial intelligence systems, game scripting, networking, in-game cinematics, and a multi-threaded rendering system [22].

Unigine[33] is an advanced real-time 3D engine for games, simulation, visualization, serious games, and virtual reality systems that supports NVIDIA Tegra2[34] based Android devices. This dual-core chip includes an ultra-low power NVIDIA GeForce GPU and is often used in tablet computers. Only a few smartphones, like the Samsung Galaxy R[35], use this processor, though. According to the Unigine website, the engine is licensed on a per-case basis, with an average deal being about 30,000 USD per project [23], and enables developers to create applications that run on Microsoft Windows, Linux, Mac OS, Sony PlayStation 3, Apple iOS, and Google Android. The engine eases game development by providing a graphics engine for photorealistic graphics, implementing live physics, handling dynamic data loading for large scenes, supporting scripting, and shipping with high quality in-game graphical user interfaces [24].

Finally, Unity3D[36] is a game development tool that eases game development by providing libraries for high performance graphics rendering, lighting effects, terrain generation, support for Allegorithmic Substances[37], physics, audio, and networking. The tool generates applications that run on Unity’s own web player, Adobe Flash, Microsoft Windows, Microsoft Xbox 360, Mac OS, Apple iOS, Google Android, Nintendo Wii, and Sony PS3. While the Unity3D base development kit is available for free, the Android plug-in has to be purchased for 280€ in the base package and for 1050€ in the pro package [25]. Furthermore, a plug-in for Unity3D is available that implements VBAR behavior directly in Android applications, letting the developer use high resolution images as AR markers. What is even more interesting, SonyEricsson[38] referred in their official blog to articles about how to create games for their Xperia PLAY[39] smartphone using Unity3D [26]. These articles describe how to use the Xperia PLAY GamePad in Unity. Further, SonyEricsson released several white papers and articles about developing games for their Xperia PLAY devices that demonstrate how to optimize the program code [27].

1.6 Relevance of Android for Augmented Reality Mobile Development

According to [28], AR is one of the top ten disruptive technologies for 2008 to 2012. Although hand-held devices are limited in processing and memory capabilities, they have a realistic chance of being suitable for mass-market AR applications according to [2].

Thus, Android based devices seem to be a good choice for developing AR applications, as they typically feature all the hardware required to use AR applications, such as cameras, GPS, sensors, and processing power. Furthermore, Android may become one of the most widely used mobile OSs within the next few years. With a worldwide market share of between 38.5% and 48% among smartphones, Android devices had already deeply penetrated the smartphone market in 2011. What is more, Gartner predicts that Android's market share will increase to 50% in 2015 [29; 30]. Figure 8 shows the market share of mobile OSs in 2015 as predicted by Gartner:

Figure 8 - Mobile OS Market Share in 2015 according to Gartner


While Android is currently dominant only in the smartphone market, the OS’s market share among tablet computers will increase to around 36% by 2015 according to [31]. On the one hand, tests carried out with the Motorola XOOM[40], which has a 10.1 inch display and weighs 730 g, indicated that large tablets are not very practical for running LBAR applications, so they might rather be used for stationary VBAR installations. On the other hand, as the XOOM is one of the heaviest tablet computers and smaller tablets are available, such as the Sony Tablet P[41], or even semi tablets like the Samsung Galaxy Note[42], new Android based tablets could well be used to run LBAR applications in the future. Figure 9 outlines the Android market share for tablets.

Figure 9 - Android Tablet market share 2011 / 2015 [32]

So far in this thesis, AR has only been considered in the context of the mass consumer market. But, as AR is a technology that is intended to improve data visualization, it is relevant to enterprises as well. In October 2010, [33] showed that the Android market share continued to grow rapidly in the enterprise mobility market sector, although still being outclassed by Apple products. Figure 10 outlines the mobile OS market share according to Good Technology in 2010.

In the context of enterprises and enterprise application development, Android can likely be integrated well into current business solutions, as Android builds on open standards and interfaces. The operating system (OS) kernel is based on Linux, and the source code of most Android versions, with the exception of all 3.x versions, is freely available. Thus, the code could be adapted to satisfy specific business requirements if needed.

Figure 10 - Mobile OS market share in enterprises in 2010 [34]

Further, when it comes to differentiating between Android and iOS from the software development perspective, the language in which the respective applications are developed is an important factor. Because Java is the main programming language on Android devices, a widely used programming language is at every developer’s disposal. Android enables developers to reach a broad audience, and Java is currently one of the most popular and best supported programming languages worldwide. Similar to C#, the Java programming language is already used in a variety of desktop-based and web-based business applications. Hence, the combination of Java and Android enables a multitude of hobbyist and professional software developers alike to create and distribute mobile applications in large numbers, without forcing them to invest many resources in adapting the source code to different runtimes on end devices. What is more, the great popularity of Java among software developers results in a large reusable code base, which makes it possible to incorporate the latest technology into applications. The popularity of Java is illustrated in Figure 11.

Figure 11 - Tiobe Programming Community Index [35]

Aside from business applications, mobile game development could become more popular in the future. With games gaining a continually growing market share, the mobile game sector will experience the largest growth opportunity [36] in the near future. In the United States, most revenue generated by mobile games can be attributed directly to Android and iOS games. According to [19], the total revenue generated by mobile games in the US in 2011 is shared between Android and iOS (58%), Nintendo DS (36%), and Sony PSP (6%). In contrast, Android and iOS games accounted for only 19% of this revenue in 2009.

Thus, with a rapid increase in smartphone market share and the rising popularity of mobile games, starting mobile AR game development now could hold great opportunities [37]. As outlined in chapter 1.2, VBAR has become more popular for developing serious games. With the increasing incorporation into smartphones of the hardware needed for LBAR, LBAR might become more widely used as well.

What is more, creating games that support networking will probably become more common among game developers as internet access through mobile phones increases; mobile phones might even overtake PCs as the most common web access device worldwide [38]. Nevertheless, the gaming market might represent one of the weaknesses of the Android operating system in comparison with other mobile platforms. While several free-to-use libraries for Android game development exist, many of them are still not stable. A more in-depth report on freely available Android game development frameworks is provided in chapter 2.

1.7 Discussion

Chapter 1 highlights that the Android platform holds great potential for software development companies due to the number of current users and the ease of application distribution. This is true for software targeting businesses and the mass consumer market alike, and according to predicted smartphone sales figures this potential will grow further until 2015. While the Android Market is estimated to contain more than 300,000 different apps [39], there are next to no LBAR games to be found. While several VBAR applications have been uploaded to the market since Qualcomm released their VBAR SDK [40], only Parallel Kingdoms can remotely be considered an LBAR game. This leads to the conclusion that most developers either do not think that AR in conjunction with location services improves the overall game experience, or do not think that games using this combination of technologies can currently be implemented in a user-friendly way. The latter argument is dealt with in detail in chapter 2 and chapter 4.

What clearly speaks in favor of building applications that run on Android is that there are several game development tools that allow a game to be created once and then ported to several different platforms out of the box. Thus, developers can build high-quality games without limiting their product to a single platform. It should be noted that, while building exactly the same game for multiple platforms is not that problematic, incorporating AR into the mobile version of a game could completely change the game.

2 Graphics Library Evaluation

While writing this thesis, it became clear that there are no official mobile frameworks that provide all the functionality needed to develop an LBAR game out of the box on Android. However, most characteristic LBAR features, such as determining the current user location or the viewing angle of the device, were already integrated in the OS. Thus, the biggest challenge was to seamlessly display content based on this data. Displaying 3D content in particular forms an integral part of the game prototype. To prove that complex AR games can be built with freely available tools, at least one graphics library needs to be presented that supports the basic functionality required to display an AR application. Therefore, four graphics libraries are evaluated and compared. The best library is used for developing the prototype application. To accomplish this goal, a utility value analysis has been carried out using the criteria listed below. Every non-mandatory criterion is rated from 0 (worst) to 10 (best) according to the degree to which the requirement is met. Each rating is then multiplied by a factor that reflects the importance of the criterion. All tests date from around 1 July 2011.

2.1 Evaluation Criteria

To determine which graphics library is best suited for developing the prototype application, several evaluation criteria have to be defined. Some of these criteria are mandatory and some are optional. Further, optional criteria are weighted according to their importance for building a 3D AR application for mobile phones. These criteria are listed below, together with an explanation of why they were chosen.

Availability on Android

This diploma thesis focuses on the development of massively multiplayer AR games that are playable on devices using the Android OS. All tests have been carried out using the Samsung Galaxy S I9000 (SGS) and Android 2.2. As a consequence, any evaluated library needs to support Android 2.2 or newer.

The SGS has been chosen as it is an above-average Android smartphone. At the time of writing this thesis, the SGS is still one of the more advanced Android-based devices available on the market. It has also become more affordable now that its successor is available. What is more, the SGS is a very popular smartphone that had sold over 5 million units by October 2010 and can therefore be seen as a serious benchmark for many smartphones that are currently in use [41].

Java Based Libraries

Developers can create applications either in Java or in C / C++ using the Native Development Kit (NDK). The majority of application code is usually written in Java, while only specific performance-critical code might be written in C++ using the NDK. While some companies claim that writing native code with the NDK for specific tasks can yield up to four times the performance when the application is optimized for their respective hardware [42], there is no guarantee that writing native code will result in any performance improvement [43]. Moreover, mixing Java and C++ code may make it harder to keep the source code clean and easy to read. Thus, the ability to develop applications entirely in Java is declared a precondition for evaluating a graphics library.

Location Based Augmented Reality Behavior

To develop an AR game, the chosen library must either already implement functionality to show the camera image as a background or at least allow the developer to add this functionality. Furthermore, it must be possible to align the view in the 3D scene with the orientation of the mobile phone, and 3D objects have to be placed correctly according to the physical location of the smartphone.
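The placement requirement can be illustrated with a minimal sketch. It assumes an equirectangular (flat-earth) approximation, which is usually adequate over the short distances relevant to LBAR, and it is not taken from the prototype or from any evaluated library. The class `GeoToScene` and its method names are hypothetical.

```java
/** Hypothetical helper: converts a geographic position into offsets relative to the device. */
final class GeoToScene {
    private static final double EARTH_RADIUS_M = 6_371_000.0; // mean Earth radius (assumption)

    /**
     * Returns {east, north} offsets in metres of an object relative to the device,
     * using an equirectangular approximation (reasonable for short distances only).
     */
    static double[] offsetMetres(double devLat, double devLon, double objLat, double objLon) {
        double dLat = Math.toRadians(objLat - devLat);
        double dLon = Math.toRadians(objLon - devLon);
        double meanLat = Math.toRadians((devLat + objLat) / 2.0);
        double east = EARTH_RADIUS_M * dLon * Math.cos(meanLat);
        double north = EARTH_RADIUS_M * dLat;
        return new double[] { east, north };
    }

    /** Bearing of the offset, measured clockwise from north, in degrees within [0, 360). */
    static double bearingDegrees(double east, double north) {
        double deg = Math.toDegrees(Math.atan2(east, north));
        return (deg + 360.0) % 360.0;
    }
}
```

The east and north offsets could then be mapped to two axes of the 3D scene, while the device orientation reported by the sensors determines the direction of the virtual camera.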

License model

In the course of writing this diploma thesis, only libraries that are open source, or that are at least available for free, are evaluated. This ensures that a library can be adapted if it does not provide AR functionality at this time.

Loading of 3D models

It is mandatory that a graphics library provides functionality to import and display 3D models that are created with modeling tools like 3DS Max or Blender3D. Although no restrictions on the supported file formats are made in advance, libraries that can handle common and open formats like Collada[43] (DAE file format) are favored. This is reflected in the rating.

Loading of animated 3D models

AR combines digital applications with the real world. Without animated models, most applications would appear very unrealistic and somewhat strange, which runs counter to the aim of AR. Therefore, importing and displaying animated models is a mandatory feature.

2D User Interface

User interfaces that overlay the camera image and the 3D scene can be used to show information to the user or to provide interactive elements like buttons or text fields. Implementations of user interfaces by graphics libraries are helpful but not mandatory. If the library itself provides no easy way of displaying 2D data, a developer can still overlay a Canvas object provided by the Android platform to perform any kind of 2D drawing. There is a drawback when drawing on a Canvas surface, however. When using this Canvas, the developer may need to synchronize the 2D drawing actions with other parts of the application, or at least ensure that drawing to the Canvas is performed in the same thread as drawing to the 3D scene and the user input handling.

Post Processing

During the development of the prototype it became clear that, when the camera image is overlaid with 3D models, it can sometimes be difficult to make out the appearance of a model on the device display. Especially when picking objects in a scene, the user probably expects the selected object to be highlighted in some way. Therefore, post processing, or at least overlaying the model with a billboard that is textured with a glowing image, could increase the usability of the application dramatically. As this may result in better usability and a better look of the game, post processing is considered a helpful feature, but it is not mandatory for a library to be selected. Libraries that support shaders for post processing receive the highest ratings, whereas libraries that ease the use of image overlaying and billboarding are still favored over those that implement neither of these features.

Although shaders and their application in the tested frameworks could not be evaluated, highlighting of selected objects in the game is implemented using billboards to enhance usability, as can be seen in Figure 12.

Figure 12 - Object highlighting in the prototype application

Input handling

Input handling is an important topic, as games are usually highly interactive. Android ships with an implementation for accessing all kinds of events, such as touch events or key events. These events, however, are fired in a different thread than the main game thread and need to be synchronized. Furthermore, events in games are often more sophisticated than a user simply pressing the touch screen. Although user input is not directly related to graphics presentation, frameworks for real-time applications often include abstractions for user input management; thus, user input mechanisms implemented in the library are considered a helpful feature.
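One common way to perform this synchronization is to hand events over through a thread-safe queue that the game thread drains once per update. The following is a minimal sketch in plain Java; `TouchSample` and `InputQueue` are hypothetical names, and on Android the coordinates would be copied from the platform's touch event inside a listener.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

/** Hypothetical immutable copy of a touch position. */
final class TouchSample {
    final float x;
    final float y;

    TouchSample(float x, float y) {
        this.x = x;
        this.y = y;
    }
}

/** Thread-safe hand-over of input events from the UI thread to the game thread. */
final class InputQueue {
    private final ConcurrentLinkedQueue<TouchSample> pending = new ConcurrentLinkedQueue<>();

    /** Called on the UI thread, for example from a touch listener. */
    void post(float x, float y) {
        pending.add(new TouchSample(x, y));
    }

    /** Called once per logic update on the game thread; returns events in arrival order. */
    List<TouchSample> drain() {
        List<TouchSample> out = new ArrayList<>();
        TouchSample sample;
        while ((sample = pending.poll()) != null) {
            out.add(sample);
        }
        return out;
    }
}
```

With this design the game logic only ever sees events at a well-defined point in its update cycle, so no further locking is needed around the game state.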

Interaction with the Scene

While user input handling forms the basis of interactive applications, this feature alone is not sufficient to provide the kind of interactivity AR applications could deliver to the user. Therefore, the application should provide ways for directly interacting with the scene by selecting 3D objects that are displayed on the screen. This picking functionality should be implemented by the chosen library, but it is not mandatory as long as it can be implemented by a developer within a short period of time.

Support

Good support is important for evaluating a library within a short period of time and for learning how to use it. Most importantly, any kind of online presence where someone can ask questions is appreciated. Besides forums and blogs, interactive demos together with their source code can help to understand the library and to use it the way its creators intended. Although this criterion is not marked as mandatory, support from the library’s creators or its community is considered necessary to evaluate the required functionality within the limited time available for writing this diploma thesis.

Performance

Besides being able to display content correctly, a library needs to perform all drawing operations around 30 times per second. Once the frame rate drops below 30 frames per second (FPS), animations may no longer look smooth and, in AR applications, the camera direction may lag behind the orientation of the mobile device. The latter is especially problematic, as a loss of orientation synchronization is readily noticed by the user because the camera moves frequently when someone carries a smartphone.

To be more specific, the performance of a game can be divided into – at least – two parts: The rendering of the game scene and the update of the game state. Whereas rendering of the game scene deals with drawing the 3D objects to the OpenGL surface in the application, updating the game state is about mathematical and logical operations like calculating AI or physics, moving objects in the scene, calculating user input and so on.

In the most straightforward form, updates to the game state and drawing to the OpenGL surface are performed at the same rate. This may lead to decreased application responsiveness when the rendering computations get heavy. Once the frame rate drops to about 20 FPS or lower, input handling and game logic updates cannot respond as fast as the user might expect.

To prevent the game logic from suffering under computationally heavy rendering, logic and rendering can be separated. For example, a game development framework could call its update methods a fixed number of times per second, for instance between 30 and 60 times, and call the draw methods as fast as possible by default. When the drawing operations exceed the hardware capabilities, the application might adapt its rendering updates as needed.
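A minimal sketch of such a separation is the widely used fixed-timestep accumulator. It is not the mechanism of any particular evaluated framework; the class `FixedStepLoop` and the cap on catch-up steps are assumptions made for illustration.

```java
/** Sketch of a fixed-timestep scheduler: logic runs at a constant rate, rendering whenever possible. */
final class FixedStepLoop {
    private final long stepNanos;        // duration of one logic update
    private final int maxStepsPerFrame;  // cap on catch-up work after a long stall (assumption)
    private long accumulator;            // elapsed time not yet consumed by logic updates

    FixedStepLoop(int updatesPerSecond, int maxStepsPerFrame) {
        this.stepNanos = 1_000_000_000L / updatesPerSecond;
        this.maxStepsPerFrame = maxStepsPerFrame;
    }

    /**
     * Call once per rendered frame with the time elapsed since the previous frame.
     * Runs the logic update as often as needed to catch up and returns the number of runs.
     */
    int advance(long elapsedNanos, Runnable update) {
        accumulator += elapsedNanos;
        int steps = 0;
        while (accumulator >= stepNanos && steps < maxStepsPerFrame) {
            update.run();
            accumulator -= stepNanos;
            steps++;
        }
        if (accumulator >= stepNanos) {
            accumulator = 0; // still behind after the cap: drop the backlog
        }
        return steps;
    }
}
```

A render thread would call `advance` with the measured frame time and then draw the scene. At 20 rendered frames per second and 30 logic updates per second, the logic runs once or twice per frame, so the game state still advances at a constant rate.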

Frameworks that implement mechanisms for keeping the logic updates constant are slightly favored but this is not a mandatory criterion as the logic could be added manually and applications can be built without it, anyway.

However, the performance of the frameworks is the most crucial non-mandatory criterion, as improving the performance of a library is likely to be more difficult than, for example, writing custom input handling or custom object picking behavior.

Finally, all criteria and their impact on the evaluation are listed in Table 1:

| Criteria | Weight |
| --- | --- |
| Availability on Android | Mandatory |
| Java Based Libraries | Mandatory |
| Location Based AR Behavior | Mandatory |
| License model | Mandatory |
| Loading of (animated) 3D models | Mandatory |
| Built-in AR behavior | 0.5x |
| Open source license model | 0.5x |
| Diversity of accepted 3D model types | 0.5x |
| 2D User Interface | 1x |
| Post Processing | 1x |
| Input handling | 0.75x |
| Interaction with the Scene | 0.75x |
| Support | 1x |
| Performance | 4x |

Table 1 - evaluation criteria

2.2 Library Evaluation

The previous section outlined the criteria that need to be checked for each evaluated library. This section lists the evaluated libraries together with the final results of all evaluations and concludes with an explanation of which library was chosen. Only libraries that meet all mandatory criteria are listed.

To provide a useful comparison, a scene consisting of a light source and up to three instances of an animated model is set up with each tested graphics library. The actual number of the animated models may vary depending on the graphical capabilities of the respective library. Furthermore, all additional listed criteria are implemented as far as possible.

2.2.1 JPCT-AE

Java Perspective Correct Texturemapping (JPCT) AE[44] is an Android port of the freely available JPCT graphics library for Java-based desktop and web applications. At the time of writing, JPCT-AE 1.23 was the current release and was evaluated.

Besides features such as loading models from 3DS[45], OBJ[46] and MD2[47] files, JPCT-AE supports collision detection, geometry-based picking, vertex lighting and billboarding. Although not tested in the course of this master thesis, the library seems to support post processing on Android as well, according to [44]. Finally, the library provides some helper functions to convert the position of 3D objects to screen coordinates and back. This is helpful for picking purposes, as actually hitting a model on small devices proves to be quite difficult. Thus, when a user wants to pick something, the screen coordinates of all pickable objects are checked, and the application can detect whether the user touched somewhere near a pickable object. Hence, a user does not need to hit an object exactly but only has to touch near it.
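The proximity picking described above can be sketched as follows. The example assumes that each pickable object has already been projected to screen coordinates by the library's helper functions; `ScreenPicker`, `Candidate` and the pixel radius are hypothetical and not part of JPCT-AE.

```java
import java.util.List;

/** Sketch of proximity picking in screen space (hypothetical helper, not a JPCT-AE class). */
final class ScreenPicker {

    /** A pickable object together with its already projected screen position. */
    static final class Candidate {
        final String name;
        final float screenX;
        final float screenY;

        Candidate(String name, float screenX, float screenY) {
            this.name = name;
            this.screenX = screenX;
            this.screenY = screenY;
        }
    }

    /** Returns the candidate closest to the touch point within radiusPx, or null if none is close enough. */
    static Candidate pickNearest(List<Candidate> candidates, float touchX, float touchY, float radiusPx) {
        Candidate best = null;
        float bestDistSq = radiusPx * radiusPx;
        for (Candidate c : candidates) {
            float dx = c.screenX - touchX;
            float dy = c.screenY - touchY;
            float distSq = dx * dx + dy * dy;
            if (distSq <= bestDistSq) {
                best = c;
                bestDistSq = distSq;
            }
        }
        return best;
    }
}
```

Comparing squared distances avoids a square root per object, and the radius can be tuned to the display density so that small models remain easy to select.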

Furthermore, the library supports skeletal animation using either the BONES[48] library or the SkeletalAPI[49] library, as well as keyframe animation. What makes this library really outstanding in comparison to the other evaluated libraries is the relatively high performance of animated models. Using MD2 keyframed models, the animated example model could be added and drawn up to 10 times in the scene without the rendering dropping below 30 FPS. After loading and animating three models on the SGS using the BONES library, the application still maintains around 20 FPS. In addition, by using existing methods for drawing images on top of the scene and a library add-on to display text, a developer can build good-looking user interfaces and still maintain a high frame rate [45].

What decreases the value of this library is the fact that at the time of writing, no support for custom input handling exists. Therefore, the developer needs to use and synchronize the native Android event handling system with the application. Furthermore, the library does not provide any functionality to align the virtual scene with the device orientation out of the box. Fortunately, some members of the community provide examples of how to implement this functionality [46].

In contrast to the aforementioned drawbacks, however, the library is well supported through a forum, and the documentation is updated frequently. Unfortunately, neither the desktop library nor the Android library ships with many examples. Table 2 concludes with a summary of the JPCT-AE evaluation:

| Criteria | Degree of Performance | Value |
| --- | --- | --- |
| Built-in AR behavior | 0 | 0 |
| Open source license model | 0 | 0 |
| Diversity of accepted 3D model types | 10 | 5 |
| 2D User Interface | 5 | 5 |
| Post Processing | 5 | 5 |
| Input handling | 0 | 0 |
| Interaction with the Scene | 10 | 7.5 |
| Support | 10 | 10 |
| Performance | 10 | 40 |
| Total | | 72.5 |

Table 2 - JPCT-AE evaluation
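The total in Table 2 follows from the weights in Table 1: each rating is multiplied by its weight and the products are summed. The following sketch reproduces this calculation; the class `UtilityValue` and the fixed ordering of the criteria are assumptions made for illustration.

```java
/** Sketch of the utility value calculation: sum of rating times weight over all optional criteria. */
final class UtilityValue {

    /**
     * Weights of the optional criteria in the order of Table 1: built-in AR behavior,
     * open source license model, diversity of 3D model types, 2D user interface,
     * post processing, input handling, interaction with the scene, support, performance.
     */
    static final double[] WEIGHTS = { 0.5, 0.5, 0.5, 1.0, 1.0, 0.75, 0.75, 1.0, 4.0 };

    /** Returns the weighted total for ratings given in the same order as WEIGHTS (each 0 to 10). */
    static double total(double[] ratings) {
        if (ratings.length != WEIGHTS.length) {
            throw new IllegalArgumentException("expected " + WEIGHTS.length + " ratings");
        }
        double sum = 0.0;
        for (int i = 0; i < WEIGHTS.length; i++) {
            sum += ratings[i] * WEIGHTS[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        double[] jpctAe = { 0, 0, 10, 5, 5, 0, 10, 10, 10 }; // ratings from Table 2
        System.out.println(total(jpctAe)); // prints 72.5
    }
}
```

Since the weights sum to 10, a library rated 10 on every criterion would reach a total of 100.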

2.2.2 JMonkeyEngine

The JMonkeyEngine[50] (JME) is an open-source community centered 3D game engine written in Java. Games that are created with JME can run as desktop applications, applets, or Android applications. Unlike JPCT, which is a graphics library, JME provides a full featured application structure that implements a unified input handling system, object picking, a mature scene graph API, a jBullet[51] physics engine implementation, an integrated graphical user interface (Nifty-GUI) and networking mechanisms, among others. Furthermore, the engine eases the use of shaders, lighting effects and special effects for post processing and 2D filter effects [47].

JME supports loading models from OBJ files and animated models from OgreMesh XML[52] and Collada files. These files can further be converted to a JME binary model format to reduce the file size and, possibly, improve loading performance. At the moment, using this binary format is the only way to load 3D models on the Android platform.

At the time of writing, the JME team is working on JME version three (JME3), which contains an Android port of the engine, and some simple examples of how to use the engine on the Android platform already exist. Loading animated models, however, caused critical problems. It was not possible to load animated models from Collada files, and importing models from OgreMesh files caused rendering issues. As a consequence, displaying a single model caused partial flickering of the whole scene, and the rendering performance decreased to around 18 FPS. Although the community support is adequate, there was not enough time to solve these problems.

Finally, the JME port to Android does not provide any functionality to align the scene with the device, but porting the code from the JPCT-AE AR demo application worked fine. Although JME provides a variety of features for desktop and applet applications, the Android port currently provides rather basic functionality and therefore cannot be used to develop the prototype application.

Table 3 concludes with a summary of the JME3 evaluation:

| Criteria | Degree of Performance | Value |
| --- | --- | --- |
| Built-in AR behavior | 0 | 0 |
| Open source license model | 10 | 5 |
| Diversity of accepted 3D model types | 10 | 5 |
| 2D User Interface | 5 | 5 |
| Post Processing | 5 | 5 |
| Input handling | 10 | 7.5 |
| Interaction with the Scene | 10 | 7.5 |
| Support | 10 | 10 |
| Performance | 2.5 | 10 |
| Total | | 55 |

Table 3 - JME3 evaluation

2.2.3 Ardor3D

Ardor3D[53] is an open source 3D game engine written in Java. After first starting out as a fork project from JME, Ardor3D provides a high level scene graph API for developing high performance games and interactive graphics applications.

Ardor3D resembles JME with respect to its features and the overall application structure. It ships with a custom input handling system, a custom binary format for 3D models, an API for skeletal animation via Collada files, texture generation, collision detection, particle generation, effects, and a GUI system, among others.

As with JME, the Android port of Ardor3D can be considered to be in its early stages. Although the library works fine for rendering simple 3D scenes, the performance of animated models is not sufficient to create an extensive game. When rendering a single instance of the custom animated model, the application frame rate drops to around ten FPS on an SGS. What is more, there are no examples of how to port some of the eye-catching effects, like bloom post processing, from the desktop applications to Android. Although shader-based effects might be available in Ardor3D version 0.7, there was not enough time to figure out how to implement them. Developing simple applications that only use static 3D models could work without problems, though. Finally, using the device sensors to align the virtual world with the device orientation was achievable via custom code.

Table 4 concludes with a summary of the Ardor3D evaluation:

| Criteria | Degree of Performance | Value |
| --- | --- | --- |
| Built-in AR behavior | 0 | 0 |
| Open source license model | 10 | 5 |
| Diversity of accepted 3D model types | 10 | 5 |
| 2D User Interface | 10 | 10 |
| Post Processing | 0 | 0 |
| Input handling | 10 | 7.5 |
| Interaction with the Scene | 10 | 7.5 |
| Support | 10 | 10 |
| Performance | 2 | 8 |
| Total | | 53 |

Table 4 - Ardor3D evaluation

2.2.4 libgdx

libgdx[54] is an open source framework that lets the developer create 2D and 3D real-time applications in Java, which can be deployed as desktop applications, Android applications and applets. Nearly the same source code can be used for both desktop and Android-based devices. Hence, the main goal of the library is that applications can be used on all platforms with just a few small code changes [48]. Unlike the previously mentioned engines and frameworks, the libgdx project does not intend to abstract the development of graphics-intensive applications using scene graph APIs or the like, but provides several helper classes to ease working with OpenGL ES 1.0, 1.1 and 2.0.

Therefore, the library ships with importers for 3D models delivered in the OBJ format and animated models delivered in the MD5 format and the OgreXML format as well as helper classes for using key frames or skeletal animations. Tests on the SGS showed that three instances of one animated model can be displayed at once at around 20 to 24 FPS. However, it was not possible to pick any of these models correctly in a demo application.

Apart from helper classes for 3D application development, libgdx ships with classes for drawing bitmap fonts, sprite rendering, computing 2D particle systems, a library for CPU-based bitmap manipulation, input and sound handling, and a 2D scene graph including a tweening framework.

If a developer has no prior knowledge of OpenGL ES, using this framework is not as straightforward as using other libraries, such as JPCT-AE. However, the library ships with enough helper classes to build games for the Android platform quickly and simply. No evaluation of shader support could be done due to time limitations, although the developers state in their project description that shaders can be used [48].

As with the other evaluated frameworks, no default implementation for aligning the virtual camera with the device orientation exists, but this functionality could be added via custom code.

Table 5 concludes with a summary of the libgdx evaluation:

| Criteria | Degree of Performance | Value |
| --- | --- | --- |
| Built-in AR behavior | 0 | 0 |
| Open source license model | 10 | 5 |
| Diversity of accepted 3D model types | 10 | 5 |
| 2D User Interface | 5 | 5 |
| Post Processing | 10 | 10 |
| Input handling | 10 | 7.5 |
| Interaction with the Scene | 0 | 0 |
| Support | 10 | 10 |
| Performance | 5 | 20 |
| Total | | 62.5 |

Table 5 - libgdx evaluation

2.2.5 Evaluation results

The final results of all evaluated libraries are ranked in Table 6:

| Library | Total |
| --- | --- |
| JPCT-AE | 72.5 |
| Libgdx | 62.5 |
| JME | 55 |
| Ardor3D | 53 |

Table 6 - Ranked final evaluation results

JME3 and Ardor3D are both extensive and well supported frameworks for building large-scale desktop and browser-based games. Although community support for both libraries is great, their Android ports are hardly documented and look rather basic at present.

In contrast, JPCT-AE and libgdx follow a more basic approach to developing real-time applications. JPCT-AE is mainly a graphics library that does not force the developer to follow any coding standard for building an application, whereas libgdx focuses on easing work with OpenGL ES by providing helper classes for common tasks when building real-time applications.

As a result, JPCT-AE and libgdx seem to work better on the Android platform than the current ports of JME and Ardor3D in general. Although the former libraries are not as feature rich as the latter ones, most of their features work reliably on Android 2.2 and above. What is more, JPCT-AE and libgdx currently have an overall better performance in regards to displaying and animating 3D models.

Due to the way libgdx is designed, all of the provided code examples should work on Android, putting it ahead of the other libraries. However, problems occurred when trying to pick animated models, and these could not be solved in time. In addition, working with libgdx could be more tedious and less productive than working with the other evaluated libraries if the developer does not have extensive knowledge of OpenGL ES.

In contrast to libgdx, JPCT-AE provides simple ways of building, animating and manipulating 3D scenes without knowing anything about the Android interfaces to OpenGL ES. The overall performance seems to be sufficient for building the AR prototype and the library provides everything that is needed to create full featured real time graphics applications on the Android platform. Therefore, the prototype application is built using JPCT-AE.

2.3 Discussion

Although several free Java-based libraries are available for developing 3D real-time applications (RTA) on Android, many of them are still prototypes. Half of the libraries that were evaluated in the course of this master thesis implemented only basic functionality or even lacked some important features.

Furthermore, when comparing all previously listed frameworks, it is noticeable that there is a lack of common formats for importing 3D models. The formats available across all libraries are MD2, MD5, 3DS, DAE, OgreXML and OBJ, with DAE being the best supported format for animated models. As most of these formats are either open or at least well supported, this did not cause many problems, though.

In contrast, performance caused the most divergence among the libraries. Whereas models in desktop applications can have up to 10,000 polygons and sometimes even more [49], the Flatman model developed for the prototype application, which was used for all performance comparison examples, merely had a poly count of 280. Although a model that was used in 1998 to display a zombie in Half-Life had more than three times as many polygons, only JPCT-AE and libgdx could render the Flatman model at more than 30 FPS on a modern smartphone. Further, JPCT-AE was the only library that could render ten models at once while still maintaining a frame rate sufficient for a game to be playable. Fortunately, while it is impossible to render and animate high-poly models from current computer games, there will probably be no need to do this on mobile phones, as the differences to low-poly models might not be noticeable on small screens.

To further test the display capabilities of JPCT-AE, three different models were used in the prototype application. Two models were created for this purpose and one was downloaded from TurboSquid[55]. The first one is the flatman model, which has a poly count of 280 and does not use textures. This model was mainly created for performance comparison. The second model is the dino model, which has a poly count of 1038 and uses a texture with a size of 512x512 pixels, downloaded from bildburg[56]. Finally, the swordsman model is a low-poly model that is used in the open-source game Glest[57]. It has a poly count of 532 and is mapped with a 512x512 texture containing the images for the body, the sword and the shield. All states of the last two models were displayed and animated in an AR application while still maintaining 40 FPS. Therefore, it can be said with a measure of certainty that displaying and animating good-quality low-poly models is possible in 3D games created with JPCT-AE. All models are displayed in Figure 13:

In contrast to the performance downside of most libraries, cross-platform development can be seen as a huge advantage of creating Android-based RTAs. Every evaluated library but libgdx is a port of a desktop game library. Thus, developing applications that run on Windows, Linux, Solaris, Mac OS X, on Android devices and in the browser via applets is simplified. Further, libgdx was even designed to make cross-platform development as simple as possible, enabling the developer to write the same code for any device. As a result, a developer can first create and test applications on the desktop and then deploy them to Android by changing only a few lines of code. Unfortunately, no library except libgdx implements all of its features on Android yet. This means that developers either build simplified applications for both platforms or strip out advanced features in the Android versions.

Figure 13 - Models used in the prototype application

Overall, the previously mentioned Java-based libraries still need to be improved and fixed before they can be considered for developing professional games. None of the libraries came close to 100 percent in the framework evaluation, although the evaluation only targeted rather basic functionality for creating a prototype application. JPCT-AE seems to be best suited for creating professional 3D games, while libgdx might still be the first choice for creating either 2D games or, at least, 3D games that do not make use of animated models. Although the main developer of libgdx uploaded a video in which several animated MD2 models were rendered at 30 FPS on a Motorola Milestone [50], the package for loading MD2 models, which is available as a library extension, did not seem to be runnable with the latest version of libgdx and therefore could not be tested and evaluated.

3 Game Prototype

A game prototype called AR Legends is developed as part of this thesis to prove that MMMGs using LBAR can be created and used on currently available above-average Smartphones. Although the prototype is also tested in a rudimentary fashion on the Motorola XOOM tablet, running the application on Android 3.x+ devices is not a requirement.

3.1 Application Requirements

Several requirements need to be met to successfully demonstrate that the AR prototype application works as expected and that it can be used by non-technical people. First and foremost, the application needs to run on the most popular Android based Smartphones. Thus, the prototype must not require any device-specific APIs or special hardware that is not built into all phones, such as gyroscopes. While the application is mainly tested with an SGS and Android 2.3.3, all phones that run at least Android 2.1 Update 1 and feature an Adreno 205 [58] or PowerVR SGX540 [59] GPU and a 1 GHz processor should not be limited by processing power in any way. It cannot be guaranteed that less powerful devices can maintain a frame rate of 30 FPS, though. The Adreno GPU, for example, is built into most of Sony Ericsson's Smartphones released in 2011, such as the Xperia ARC S and the Xperia PLAY. Furthermore, while the application is installed on a XOOM for testing purposes as well, it is not intended to be used on tablets or any other device with HD resolution or higher. Finally, although the prototype application is not intended to be distributed to consumers, it should be designed so that it can easily be distributed via the default Android market without any further configuration steps. As a consequence, the application must not require root access to the system.

Another fundamental requirement is the capability to display 2D and 3D content in real time. All content that is part of the game needs to be visualized and interactive. While displaying 3D and 2D content forms the basis of the prototype application, the device orientation and the GPS coordinates must also be taken into account to build an LBAR application. Further details on these topics are given in chapter 2.

As the prototype is a massively multiplayer game, network management and data synchronization across multiple local game sessions form the most complex part of the prototype. The application is a location based real-time game and, thus, a lot of data needs to be synchronized almost in real time between participants who are near each other. Therefore, as data could be shared at any given time, opening and maintaining persistent network connections is required.

Basically, these network connections could either be formed by establishing ad-hoc connections directly between client devices or by maintaining persistent connections to central game servers. Ad-hoc connections are quite common for mobile games, as interfaces like Bluetooth, WLAN, NFC, or infrared are often available in mobile phones. At first, these ad-hoc connections appear to be the obvious choice, as connections mainly need to be established when participants are near each other. However, centralized game architectures have other advantages. Information can always be sent to the server and, therefore, data can be exchanged between all participants at any given time. What is more, all data traffic can be regulated and filtered by servers that are controlled by the application developers. In the context of mobile multiplayer games, the major drawback of a centralized game architecture is higher delay. In the end, a combination of a centralized architecture and ad-hoc networks would possibly be the best choice, but for the sake of simplicity, only a centralized game architecture is used.

Finally, the last requirement for the prototype to work is an administration interface that can be used to easily place game specific content in the virtual scene. This functionality cannot be integrated into the Smartphone application and, hence, the development of a web-based administration tool is required. Thus, the whole game scene can be administered from any computer using a modern browser. This administration tool should be as simple to use as the prototype application, as it should be usable by non-technical people and by people who were not involved in the development process of the prototype.

3.2 Game Basics

The player assumes the role of a hero who explores an augmented version of reality by fighting creatures and finding new equipment. The player moves through the real world carrying a Smartphone. Enemies or chests are overlaid on the camera image, depending on the orientation of the Smartphone and the geographical location of the player.

A user first needs to register and create an account before playing the game. From that point on, all actions a participant takes while logged in to the game are recorded and monitored by the server. Once the game starts, players can play either alone or in a group with other people.

The game uses the real world (in the test scenario, the game is limited to Graz, Austria) and divides it into small areas. Players can only see and interact with enemies that are in the same area they are currently in. Figure 14 shows an example of areas and assigned creatures in the Admin user interface.

Figure 14 – Areas of Interest and Creatures in Admin UI

Areas serve multiple purposes in the game:

Firstly, areas can form the organizational basis of distributed load balancing for game servers. These areas are referred to as Central Server Areas (CSAs). In large MMOGs, the game world is divided into multiple regions, each of which is assigned to one or more servers that handle all game procedures within that region. Thus, areas in the prototype might serve as natural units comparable to the regions of MMOGs.

There are two types of areas in the game:

On the one hand, areas of interest (AOIs) cover places in which a player interacts with enemies. A player cannot see enemies that are located in such an area unless he or she has entered it. Interactions between players can only happen if all of them stay within the same AOI. Once a player leaves such an area, creatures stop fighting him or her and either go back to their start positions or fight other players who are still in reach. As most of the critical game actions, such as fighting, happen within these areas, fast data synchronization between players and the servers is of greater concern here. Unlike CSAs, AOIs can be widespread and do not need to border each other. Therefore, it is possible that logged-in players are not located in any AOI while traveling the world. Further, as an AOI can only be assigned to a single CSA, it must not overlap multiple CSAs.

On the other hand, CSAs cover the whole playing surface of the game and are each directly linked to one central game server. Each CSA can cover several AOIs, though. Every joined player is connected to the CSA that covers his or her current location. In addition, every CSA is linked to its surrounding CSAs. Once a player is about to leave his or her CSA, the currently active CSA server checks the direction the player is heading in and sends the physical address of the next area's server. Figure 15 shows several CSAs and AOIs in the administration tool.

Figure 15 - CSAs containing several AOIs
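Determining which AOI, if any, a player is currently located in comes down to a point-in-polygon test. The following is only a minimal sketch of that idea and not the prototype's actual code: the class `AoiLocator`, its nested `Area` type and the method names are hypothetical, and longitude and latitude are simply treated as planar coordinates, which is assumed to be acceptable for areas as small as a few city blocks.

```java
import java.util.List;

/** Hypothetical helper: finds the AOI polygon that contains a player position. */
final class AoiLocator {

    /** A polygon-shaped area; vertices are given in order as longitude/latitude arrays. */
    static final class Area {
        final String name;
        final double[] lon;
        final double[] lat;

        Area(String name, double[] lon, double[] lat) {
            this.name = name;
            this.lon = lon;
            this.lat = lat;
        }

        /** Ray-casting (even-odd) test: true if the point lies inside the polygon. */
        boolean contains(double pLon, double pLat) {
            boolean inside = false;
            for (int i = 0, j = lon.length - 1; i < lon.length; j = i++) {
                // Does the edge j -> i straddle the horizontal line through the point?
                boolean straddles = (lat[i] > pLat) != (lat[j] > pLat);
                if (straddles) {
                    // Longitude at which the edge meets that horizontal line.
                    double lonAtLat = lon[j]
                            + (pLat - lat[j]) * (lon[i] - lon[j]) / (lat[i] - lat[j]);
                    if (pLon < lonAtLat) {
                        inside = !inside;
                    }
                }
            }
            return inside;
        }
    }

    /** Returns the first AOI containing the position, or null if the player is in none. */
    static Area findAoi(List<Area> areas, double lon, double lat) {
        for (Area area : areas) {
            if (area.contains(lon, lat)) {
                return area;
            }
        }
        return null;
    }
}
```

A `null` result corresponds to the case in which a logged-in player is traveling outside of every AOI. The same test could be applied to CSA polygons, with the difference that a CSA should always be found because CSAs cover the whole playing surface.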

Secondly, as the application does not know about the real environment, AOIs can be used to take natural limitations of sight into account. By default, the application would display creatures even if they were behind a building. By using AOIs, this problem can be solved. This principle is further outlined in Figure 16.

In the picture, the current player is displayed as the green figure. It can be seen that without AOIs, the application only knows the distance and direction to nearby enemies. It cannot determine on its own, however, whether there are any physical barriers that would prevent the user from actually seeing these objects. To counter this problem, only objects that are placed within the same AOI as the user are displayed. As Figure 16 suggests, even if an object is within the same AOI, it is not certain whether it would be hidden if it were real. Therefore, virtual lines need to be computed that run from the current position to each object placed in the current AOI. If such a line crosses the border of the polygon-shaped AOI, the object is considered to be out of sight.
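The sight check described above can be expressed as a segment intersection test between the virtual line and every border edge of the AOI. The following is a hedged sketch under stated assumptions rather than the prototype's implementation: the class `LineOfSight` and its methods are hypothetical names, positions are assumed to be already converted to planar x/y coordinates, and lines that merely touch a vertex or run along an edge are not treated as crossings.

```java
/** Hypothetical helper for the sight check inside a polygon-shaped AOI. */
final class LineOfSight {

    /** Cross product (b - a) x (c - a): positive for a left turn, negative for a right turn. */
    private static double orientation(double ax, double ay, double bx, double by,
                                      double cx, double cy) {
        return (bx - ax) * (cy - ay) - (by - ay) * (cx - ax);
    }

    /** True if segment p1-p2 properly crosses segment q1-q2 (touching is ignored). */
    static boolean segmentsCross(double p1x, double p1y, double p2x, double p2y,
                                 double q1x, double q1y, double q2x, double q2y) {
        double d1 = orientation(q1x, q1y, q2x, q2y, p1x, p1y);
        double d2 = orientation(q1x, q1y, q2x, q2y, p2x, p2y);
        double d3 = orientation(p1x, p1y, p2x, p2y, q1x, q1y);
        double d4 = orientation(p1x, p1y, p2x, p2y, q2x, q2y);
        // Each segment's endpoints must lie strictly on opposite sides of the other segment.
        return d1 * d2 < 0 && d3 * d4 < 0;
    }

    /**
     * polyX/polyY hold the AOI vertices in order. Returns true if the virtual line
     * from the player to the object crosses no border edge of the AOI.
     */
    static boolean isVisible(double[] polyX, double[] polyY,
                             double playerX, double playerY,
                             double objX, double objY) {
        for (int i = 0, j = polyX.length - 1; i < polyX.length; j = i++) {
            if (segmentsCross(playerX, playerY, objX, objY,
                              polyX[j], polyY[j], polyX[i], polyY[i])) {
                return false; // the line leaves the AOI, so the object counts as hidden
            }
        }
        return true;
    }
}
```

For an L-shaped AOI around a building corner, a player and a creature in different arms of the L would be reported as not visible to each other, while two positions in the same arm would be. The check only matters for concave AOIs, since a line between two points inside a convex polygon never crosses its border.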

Thirdly, areas work as a performance optimization tool on the client devices. Creatures that are outside of the current AOI cannot be interacted with and are not displayed at all. This way, the game administrator can ensure that not too many objects need to be rendered and computed at once. Viewing objects that are located in other areas is only possible using the spyglass mode in the game. This mode lets the user zoom the device camera image and the 3D scene, but does not allow for any further interaction. There are other ways of reducing the number of visible objects, however. The simplest approach, for example, would be to show only those virtual objects that are within a certain distance of the current location. While this approach would be easier to implement and to maintain, it would not fit the game design well.
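For comparison, the simpler distance-based alternative mentioned above could look roughly like the following sketch. It is not used in the prototype; the class `RangeFilter`, its method names and the choice of the haversine formula with a mean Earth radius of 6,371 km are assumptions made purely for illustration.

```java
/** Hypothetical distance-based visibility filter (the alternative that was not chosen). */
final class RangeFilter {

    /** Mean Earth radius in meters; an approximation that is adequate at game scale. */
    static final double EARTH_RADIUS_M = 6371000.0;

    /** Great-circle distance between two GPS positions, using the haversine formula. */
    static double distanceMeters(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double sinLat = Math.sin(dLat / 2);
        double sinLon = Math.sin(dLon / 2);
        double a = sinLat * sinLat
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * sinLon * sinLon;
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }

    /** True if the object lies within maxMeters of the player and would be shown. */
    static boolean inRange(double playerLat, double playerLon,
                           double objLat, double objLon, double maxMeters) {
        return distanceMeters(playerLat, playerLon, objLat, objLon) <= maxMeters;
    }
}
```

On an actual device, Android's `Location.distanceBetween` could be used in place of the hand-written formula. In either case, a pure distance filter ignores buildings and other barriers, which is one reason the AOI-based approach fits the game better.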

After all, the main aspect of the game is to play in a party of up to five people. The virtual champions need to use their skills to maximize the strength of the group. Hence, every member of a party should take on one or several particular roles by focusing on some of the skills that are available in the game. While the game is focused on playing with a predefined set of people, a player is always free to leave or join a group as long as no game limitations (e.g. a maximum of five people in a group) are exceeded. Although in some situations groups might join together to fight extraordinarily strong enemies, direct interaction is only possible between players in the same group.

In this respect, interactions with team members mainly occur when a player heals or improves avatar statistics – like defense or attack damage – of one or more party members. The exact position of the party members is not taken into account in the game as the GPS system is not accurate enough anyway, although players need to stay within a certain range to be members of a particular party.

Therefore, players are always connected to a global server, referred to as the Central Account Server (CAS), to which they logged in. Via this server, a player can find and chat with other players and form parties. As long as a player is in a specific area, he or she is additionally connected to the respective CSA server.